Eye Tracking in VR

Ying Wu

September 30, 2019

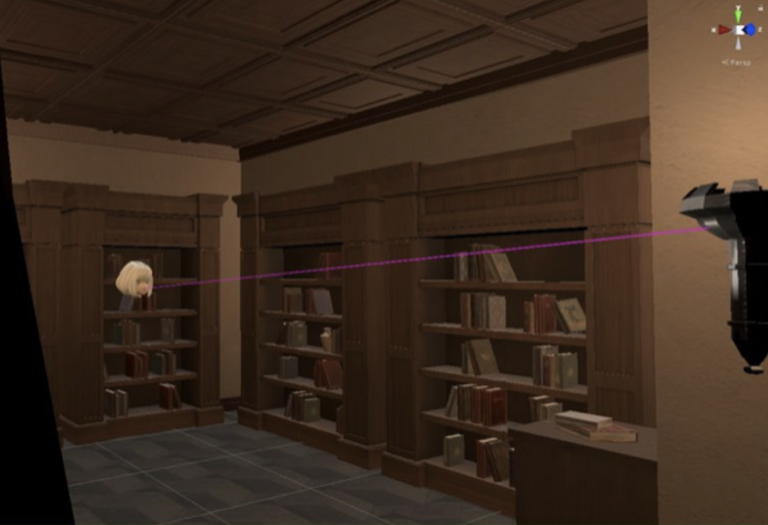

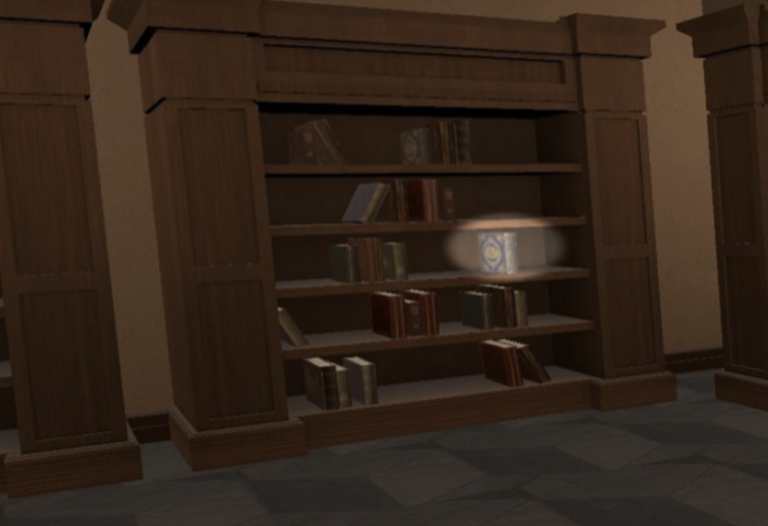

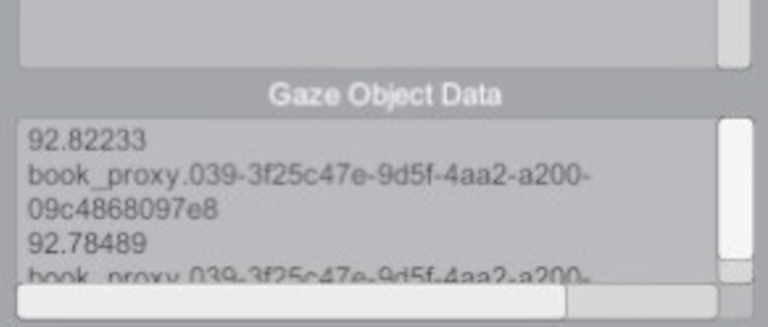

During Escape Room problem solving, the eye gaze coordinates (x, y, z) detected by the Vive Pro Eye headset are converted into a stream of gaze direction vectors. These vectors are combined with the user's head direction and eye position in the game world to create a ray. In the first image, the player's head is represented by the avatar, and her line of gaze is represented by the pink ray. In the second image, the spotlight is centered on a fixated object, which in this case is a book. When the spotlight (or ray) collides with an object (e.g., the book), the object's unique name and the amount of time spent in contact with it are recorded (third image). The spotlights and rays are visible only to the experimenter, not to the user in the VR environment. These gaze data are synchronized with time-series EEG samples and other physiological data streams via Lab Streaming Layer, using software we developed and uploaded to GitHub.
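The gaze-to-object pipeline can be sketched in a few lines of Python. This is a minimal, hypothetical sketch, not the code used in the study. It assumes the headset reports a gaze direction in head-local coordinates with +z as "forward", that head rotation is available as a unit quaternion `(w, x, y, z)`, and that each object's collider can be approximated by a bounding sphere. The spotlight cone is reduced to a single ray. All names (`gaze_ray`, `first_hit`, `dwell_times`, `nearest_sample_index`) are illustrative. The last helper shows one way to look up the EEG sample closest to a gaze event, assuming both streams are already on a common time base, which is what Lab Streaming Layer's time synchronization provides.

```python
import bisect
import math
from dataclasses import dataclass
from typing import Optional

Vec3 = tuple[float, float, float]
Quat = tuple[float, float, float, float]  # (w, x, y, z), assumed unit length

def normalize(v: Vec3) -> Vec3:
    n = math.sqrt(sum(c * c for c in v))
    return (v[0] / n, v[1] / n, v[2] / n)

def rotate(v: Vec3, q: Quat) -> Vec3:
    """Rotate v by unit quaternion q: v' = v + w*t + q_xyz x t, where t = 2 * (q_xyz x v)."""
    w, qx, qy, qz = q
    tx = 2 * (qy * v[2] - qz * v[1])
    ty = 2 * (qz * v[0] - qx * v[2])
    tz = 2 * (qx * v[1] - qy * v[0])
    return (
        v[0] + w * tx + (qy * tz - qz * ty),
        v[1] + w * ty + (qz * tx - qx * tz),
        v[2] + w * tz + (qx * ty - qy * tx),
    )

@dataclass
class Ray:
    origin: Vec3     # eye position in world coordinates
    direction: Vec3  # unit gaze direction in world coordinates

@dataclass
class SceneObject:
    name: str        # unique object name, e.g. "book"
    center: Vec3
    radius: float    # bounding sphere: a simplification of real colliders

def gaze_ray(local_gaze: Vec3, head_rotation: Quat, eye_position: Vec3) -> Ray:
    """Combine a head-local gaze direction with the head pose to build a world-space ray."""
    return Ray(eye_position, normalize(rotate(normalize(local_gaze), head_rotation)))

def hit_distance(ray: Ray, obj: SceneObject) -> Optional[float]:
    """Distance along the ray to the sphere surface, or None on a miss."""
    oc = tuple(o - c for o, c in zip(ray.origin, obj.center))
    b = sum(d * e for d, e in zip(ray.direction, oc))
    c = sum(e * e for e in oc) - obj.radius ** 2
    disc = b * b - c
    if disc < 0:
        return None
    t = -b - math.sqrt(disc)
    if t < 0:  # ray starts inside the sphere: use the far intersection
        t = -b + math.sqrt(disc)
    return t if t >= 0 else None

def first_hit(ray: Ray, objects: list[SceneObject]) -> Optional[str]:
    """Name of the nearest object the ray collides with, or None."""
    best_name, best_t = None, math.inf
    for obj in objects:
        t = hit_distance(ray, obj)
        if t is not None and t < best_t:
            best_name, best_t = obj.name, t
    return best_name

def dwell_times(samples, objects: list[SceneObject]) -> dict[str, float]:
    """Accumulate seconds of gaze contact per object.

    samples: time-sorted (timestamp_s, local_gaze, head_rotation, eye_position) tuples.
    Each interval between consecutive samples is credited to the object hit at its start.
    """
    totals: dict[str, float] = {}
    for prev, nxt in zip(samples, samples[1:]):
        t0, gaze, rot, pos = prev
        t1 = nxt[0]
        name = first_hit(gaze_ray(gaze, rot, pos), objects)
        if name is not None:
            totals[name] = totals.get(name, 0.0) + (t1 - t0)
    return totals

def nearest_sample_index(timestamps: list[float], t: float) -> int:
    """Index of the sorted EEG timestamp closest to t (both on a shared clock)."""
    i = bisect.bisect_left(timestamps, t)
    if i == 0:
        return 0
    if i == len(timestamps):
        return len(timestamps) - 1
    return i if timestamps[i] - t < t - timestamps[i - 1] else i - 1
```

For example, with the identity quaternion `(1, 0, 0, 0)`, a forward gaze of `(0, 0, 1)` from the origin hits a sphere named `"book"` centred at `(0, 0, 2)` before a larger sphere further back at `(0, 0, 4)`, so only the book accumulates dwell time. Crediting each interval to the object hit at its start keeps the bookkeeping simple; a real system might instead require a minimum fixation duration before counting contact.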